Salmon Vision: The AI revolution comes to salmon conservation

Bringing the power of artificial intelligence to bear on monitoring anadromous salmon populations for faster and more efficient counts, toward more resilient watersheds

𓆟 𓆝 𓆟

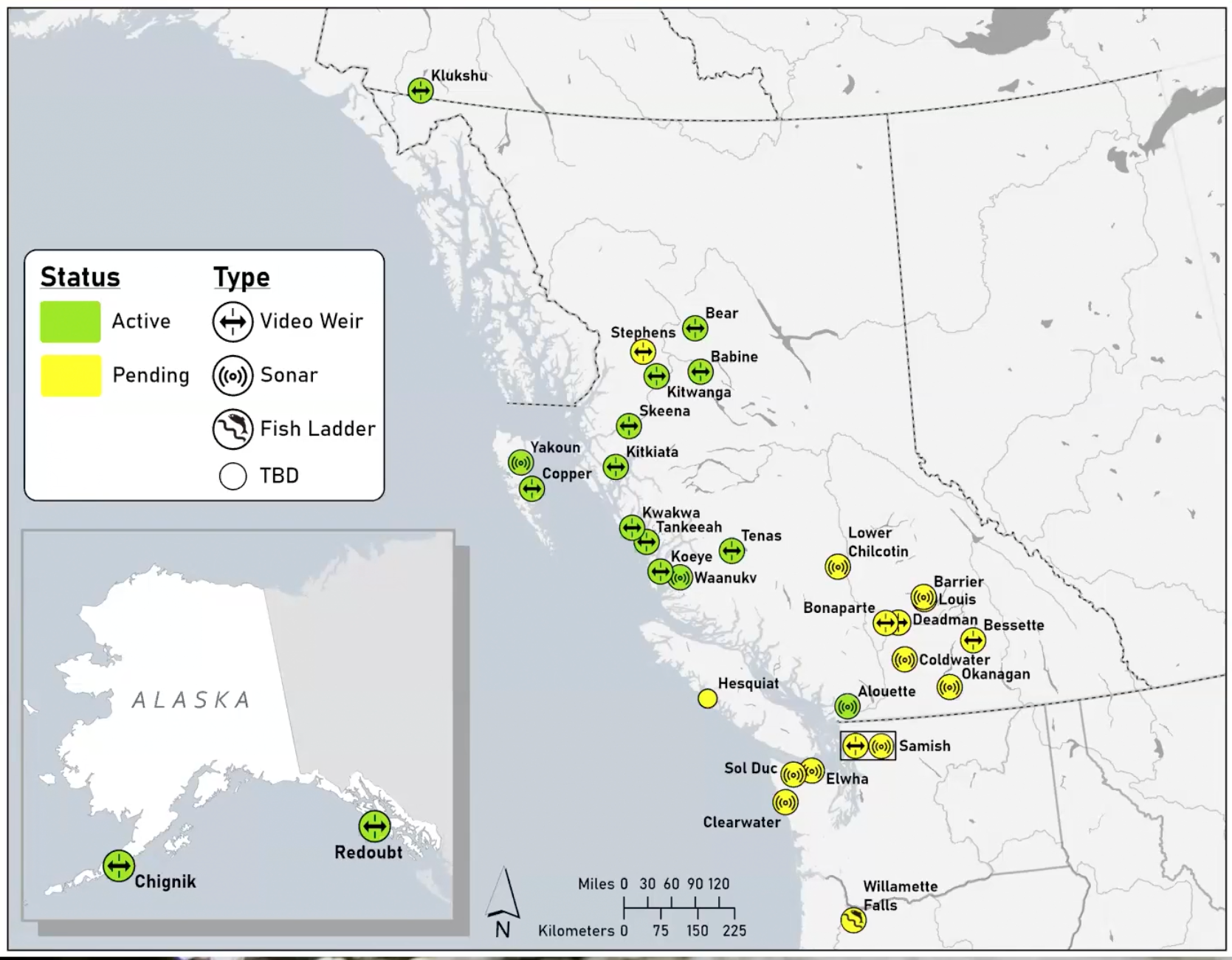

At sixteen sites across Alaska and British Columbia this year, artificial intelligence is replacing one of fisheries biology's most tedious tasks: manually counting migrating salmon. And it's doing the job accurately, at a lower cost than ever before.

By 2028, the Wild Salmon Center plans to scale its Salmon Vision system to 100 watersheds—potentially revolutionizing how biologists monitor fish populations.

Dr. William Atlas, WSC's senior salmon watershed scientist, and Audie Paulus, senior development manager, introduced Salmon Vision to the public in a web presentation on July 16th.

The problem: manual fish counting

Anadromous fish are born in rivers, migrate to the ocean, and return to their natal streams to spawn. Governments and NGOs spend millions every year to bolster fish populations. Knowing how many fish make it back to spawn is critical to making the right management choices.

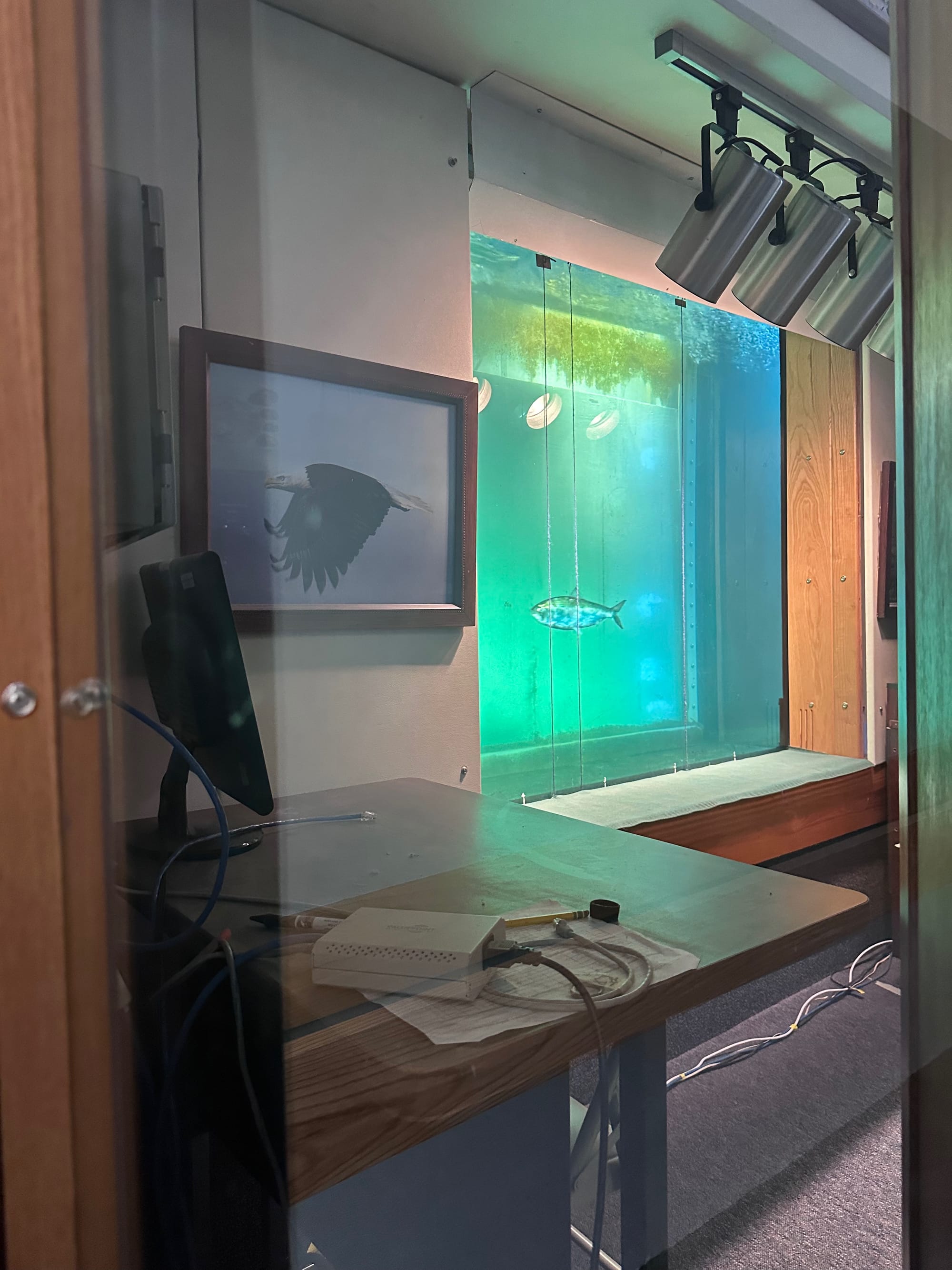

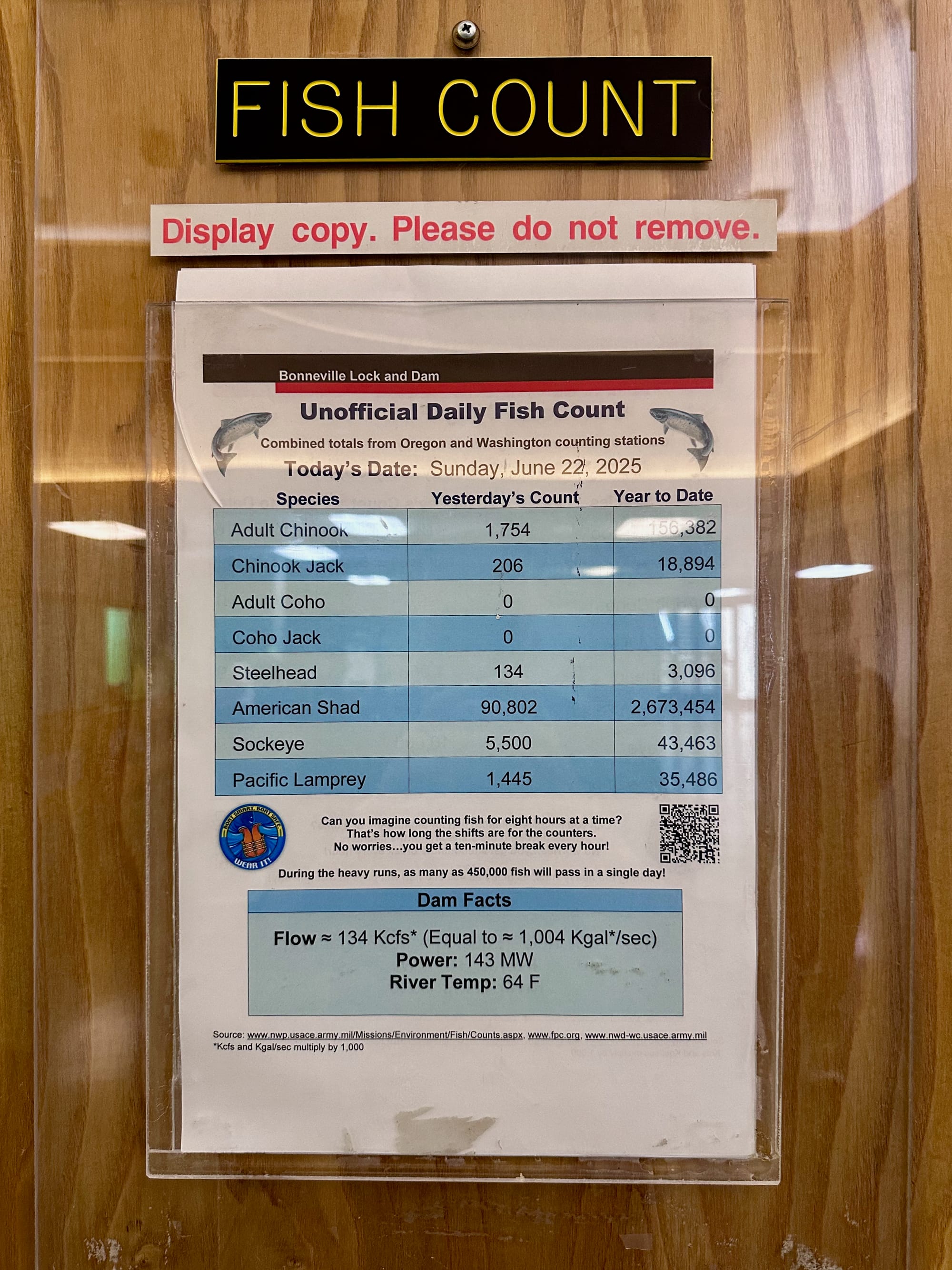

Counting fish has long been a manual task. In Bonneville Dam's counting room, fish biologists watch the fish ladder. At night, cameras record the fish moving through; the recordings are reviewed later and the counts updated. These counts determine opening seasons for sport fishing and salmon harvest.

We've just come through a limbo period in the Deschutes summer steelhead fishery, when its status (open or closed) depended on the count. It'll be open this year, but here's an earlier video with Amy Hazel of the Deschutes Angler describing why the count is so important. Most steelheaders have the fish count page bookmarked, because knowing how fish are moving can help them find elusive chrome.

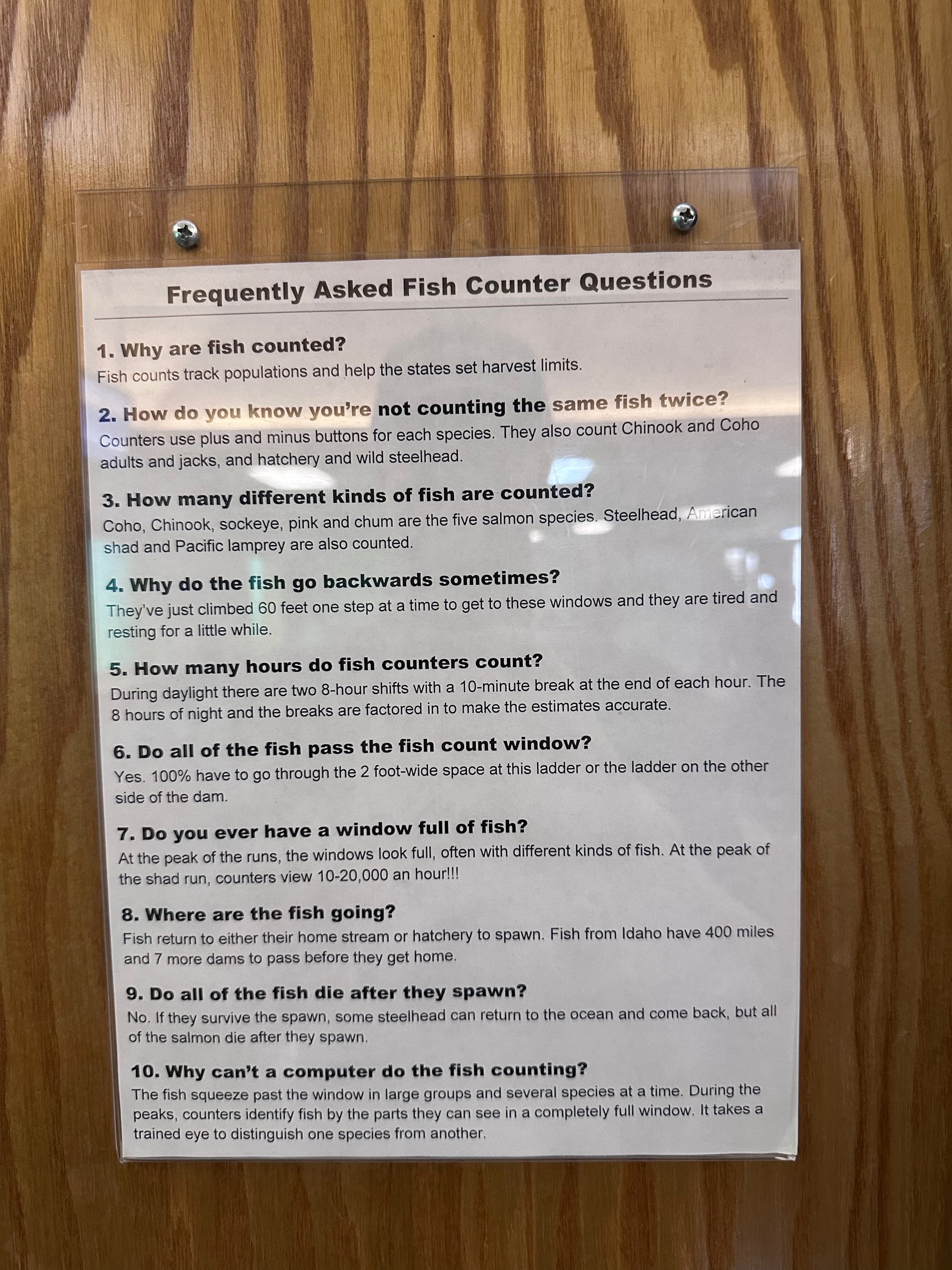

How the fish-counting sausage gets made at Bonneville Dam. Note FAQ number 10, "Why can't a computer do the fish counting?", and the prospect of an eight-hour shift spent counting fish, with a ten-minute break every hour. The shad is there for emphasis: it's one of the 90,000+ that, according to the count, passed in the prior period.

In free-flowing watersheds with larger runs, like those in British Columbia and Alaska, biologists use sonar, counting towers, and traditional fish weirs (traps that hold fish for counting). Here's a great explanation of what the work at a fish weir is like. It's remote, manual, and monotonous. Aerial surveys are sometimes used to estimate how many fish are moving through the system. Many of the most prolific runs don't rely on precise counts.

Salmon Vision could help fisheries managers get a quicker read on salmon returns. Better counts will inform subsistence fishing, sport fishing, and broader conservation.

Inside the Salmon Vision system

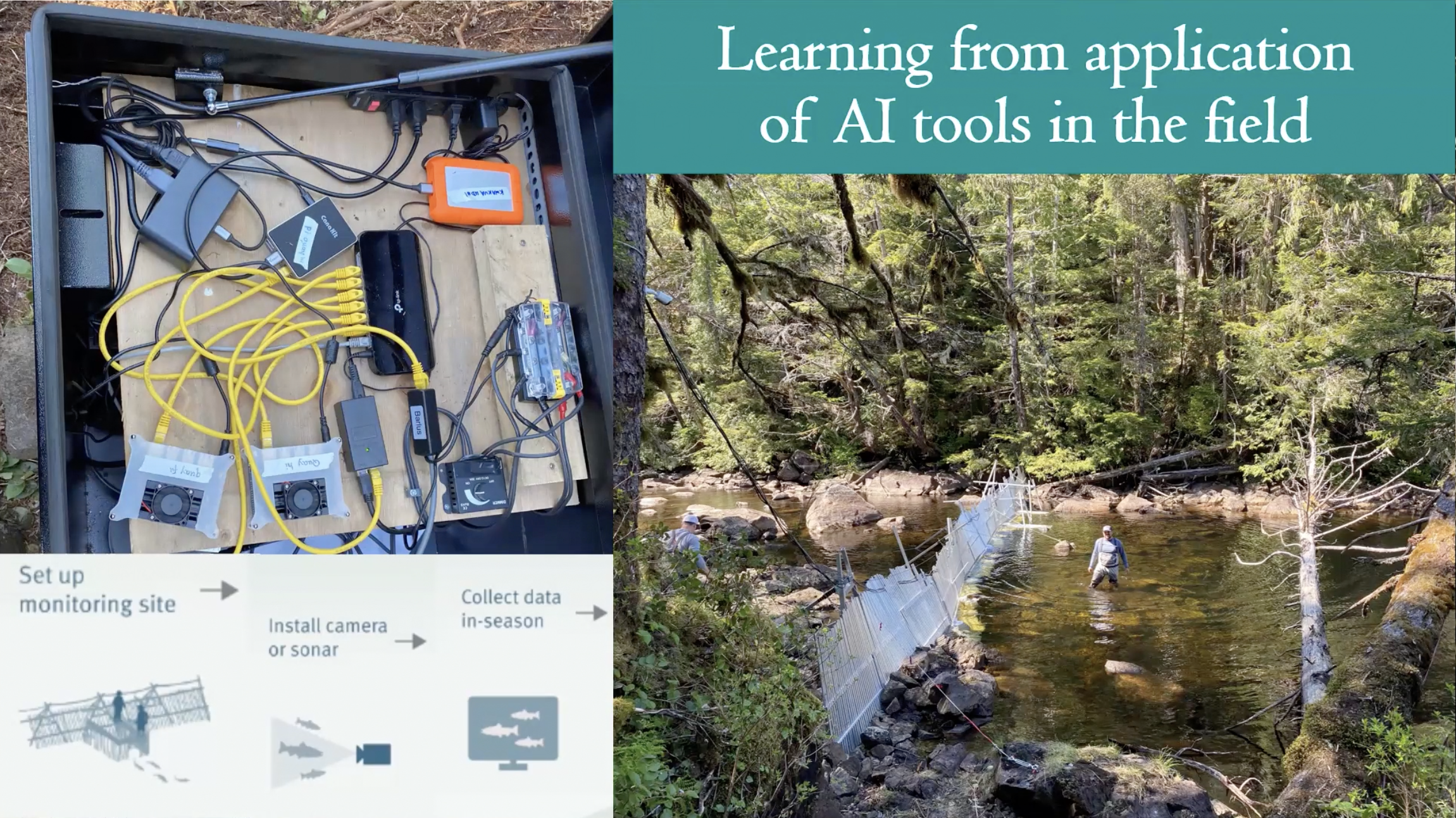

The system combines underwater cameras or sonar with AI-powered fish identification software. Remote stations run on solar power and can operate for months without human intervention.

1. Set up monitoring site

2. Install camera or sonar

3. Collect data in-season

4. Use video data to train computer vision models

5. Train computer to count and ID salmon

Here's the simplest possible description of how it all works: As fish swim through the weir, video or sonar picks them up. Relevant video frames or data are extracted, stored, and analyzed. The fish is identified. It's then added to a database researchers can access and verify through an app.
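To make those steps concrete, here is a minimal sketch of the frame-to-database flow, assuming a simple motion filter decides which frames are "relevant" and a pluggable `identify` function stands in for the real species classifier. The names `has_motion`, `run_pipeline`, and the `detections` table are hypothetical illustrations, not Salmon Vision's actual code; the `verified` column models the review step researchers do in the app.

```python
import sqlite3
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical stand-in for a decoded video frame: rows of grayscale pixel values.
Frame = Sequence[Sequence[int]]


def has_motion(prev: Frame, curr: Frame, threshold: float = 10.0) -> bool:
    """Flag a frame as relevant if its mean absolute pixel change exceeds `threshold`."""
    diffs = [abs(a - b) for row_p, row_c in zip(prev, curr) for a, b in zip(row_p, row_c)]
    return sum(diffs) / len(diffs) > threshold


def run_pipeline(
    frames: List[Frame],
    identify: Callable[[Frame], Optional[Tuple[str, float]]],
    db: sqlite3.Connection,
) -> int:
    """Extract relevant frames, identify fish, and store unverified records for review."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS detections ("
        "frame INTEGER, species TEXT, confidence REAL, verified INTEGER DEFAULT 0)"
    )
    stored = 0
    for i in range(1, len(frames)):
        if not has_motion(frames[i - 1], frames[i]):
            continue  # nothing changed: likely empty water, so skip analysis
        result = identify(frames[i])
        if result is None:
            continue  # motion, but the identifier saw no fish
        species, confidence = result
        db.execute(
            "INSERT INTO detections (frame, species, confidence) VALUES (?, ?, ?)",
            (i, species, confidence),
        )
        stored += 1
    db.commit()
    return stored


# Usage with a toy "identifier" that calls any bright frame a sockeye.
blank = [[0] * 4 for _ in range(4)]
fish = [[200] * 4 for _ in range(4)]
frames = [blank, blank, fish, fish, blank]


def toy_identify(frame: Frame) -> Optional[Tuple[str, float]]:
    mean = sum(sum(row) for row in frame) / sum(len(row) for row in frame)
    return ("sockeye", 0.9) if mean > 50 else None


db = sqlite3.connect(":memory:")
stored = run_pipeline(frames, toy_identify, db)
rows = db.execute("SELECT frame, species, verified FROM detections").fetchall()
print(stored, rows)  # 1 [(2, 'sockeye', 0)]
```

Every stored record starts with `verified = 0`, so a reviewer can confirm or correct it later; in a real deployment the toy identifier would be replaced by a trained detection model.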

"This work aims to harness the power of artificial intelligence to advance more rapid and efficient analysis of salmon population monitoring data," Dr. Atlas said. This offers "in-season insight that can advance adaptive and precautionary fishery management."

Right now, some sites send data to the cloud for processing. Soon they'll be crunching numbers stream-side: the team is deploying the first Salmon Vision instances with edge systems. Edge processors are high-performance chips that do the computation on-site and send distilled fish counts to the cloud instead of raw video. This cuts the cost of satellite bandwidth and cloud servers.
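The bandwidth argument is easy to see with a rough comparison. This sketch assumes an edge device rolls an hour's per-fish species labels into one compact JSON message, and compares it with an hour of raw video at a hypothetical constant 2 Mbit/s bitrate; the message format and function names are illustrative assumptions, not the project's actual protocol.

```python
import json
from collections import Counter
from typing import Iterable


def summarize_hour(species_events: Iterable[str], site: str, hour: str) -> bytes:
    """Roll one hour of per-fish species labels into a compact JSON message."""
    counts = Counter(species_events)
    message = {"site": site, "hour": hour, "counts": dict(sorted(counts.items()))}
    # Compact separators keep the payload small for a satellite link.
    return json.dumps(message, separators=(",", ":")).encode("utf-8")


def raw_video_bytes(hours: float, bitrate_mbps: float) -> int:
    """Approximate size of raw video at a constant bitrate (megabits per second)."""
    return int(hours * 3600 * bitrate_mbps * 1_000_000 / 8)


payload = summarize_hour(["sockeye", "sockeye", "coho", "sockeye"], "site-A", "14:00")
video = raw_video_bytes(hours=1, bitrate_mbps=2.0)  # hypothetical 2 Mbit/s stream
print(payload.decode())
print(len(payload), "bytes of counts vs", video, "bytes of video")
```

Under these assumptions the summary is well under a kilobyte, while the hour of video is about 900 MB, which is why sending counts rather than footage matters over a satellite connection.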

Using AI to identify migrating fish

Unlike generative AI, which creates text or images, Salmon Vision uses computer vision, the same technology that helps your phone recognize faces in photos.

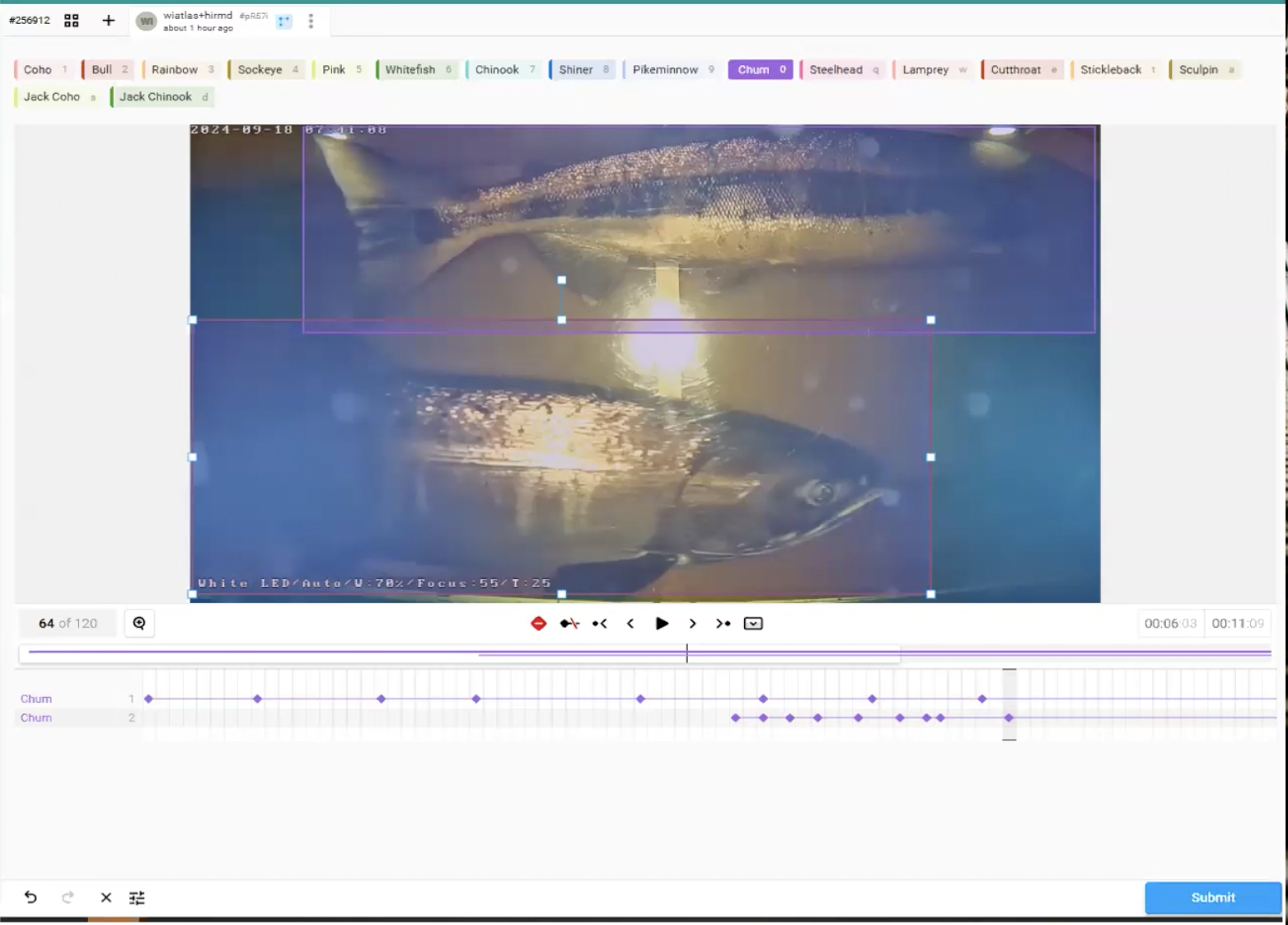

AI systems are only as good as their datasets. As Salmon Vision produces more output, new data can be reviewed and fed back in to retrain and refine its models. A big dataset, and plans to keep feeding it, is what sets Salmon Vision apart. Its initial training data included "1,567 salmon videos ranging from several minutes to an hour long with 532,000 labeled frames and 15 unique species-class labels." The initial paper describing the system, authored by Atlas and the team, appeared in Frontiers in Marine Science in 2023 ("Wild salmon enumeration and monitoring using deep learning empowered detection and tracking").

Models trained on the initial data performed well in the field, in line with the findings in the group's paper. There, the top-performing model "achieved a mean average precision (mAP) of 67.6%, and species-specific mAP scores > 90% for coho and > 80% for sockeye salmon when trained with a combined dataset of Kitwanga and Bear Rivers' salmon annotations." The team now has more than 2.5 million annotated frames of video and sonar across its projects, and expects another million new frames by the end of this summer.
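For readers unfamiliar with mAP: a model's detections for one species are ranked by confidence, precision and recall are tracked down that ranking, and average precision (AP) is the area under the resulting precision-recall curve. mAP is the mean of the per-species APs. The sketch below uses all-point interpolation and assumes each detection has already been marked as a true or false positive (typically by an IoU overlap threshold such as 0.5). The paper's exact evaluation protocol may differ (COCO-style mAP, for instance, also averages over several IoU thresholds), and the toy scores are invented for illustration.

```python
from typing import Dict, List, Tuple

Detection = Tuple[float, bool]  # (confidence score, matched a ground-truth fish?)


def average_precision(dets: List[Detection], n_ground_truth: int) -> float:
    """All-point interpolated AP for one class."""
    if n_ground_truth == 0:
        return 0.0
    ranked = sorted(dets, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_true_positive in ranked:
        if is_true_positive:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_ground_truth)
        precisions.append(tp / (tp + fp))
    # Precision envelope: each point takes the best precision at equal or higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p  # area under the stepped precision-recall curve
        prev_recall = r
    return ap


def mean_average_precision(per_class: Dict[str, Tuple[List[Detection], int]]) -> float:
    """Unweighted mean of per-class AP values."""
    aps = [average_precision(dets, n_gt) for dets, n_gt in per_class.values()]
    return sum(aps) / len(aps)


per_class = {
    "coho": ([(0.9, True), (0.8, True)], 2),
    "sockeye": ([(0.9, True), (0.7, False), (0.6, True)], 2),
}
print(round(average_precision(*per_class["sockeye"]), 3))  # 0.833
print(round(mean_average_precision(per_class), 3))  # 0.917
```

A single confident false positive ranked above a real sockeye is enough to pull that class's AP below 1.0, which is one reason species-level scores can differ so much from the overall figure.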

The system uses YOLO, or You Only Look Once, a proven object detection framework that can identify and track multiple fish species in real time.

It runs with a custom CV model trained to detect and track various species, and the model can identify multiple objects in a single frame. It's also being trained to determine a fish's sex, whether its adipose fin has been clipped, and whether it is injured.
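Detection alone finds fish in individual frames; counting requires linking those detections across frames so each fish is counted once. The article doesn't describe Salmon Vision's tracker, so this is a deliberately simple stand-in: a greedy intersection-over-union (IoU) tracker, with each track's species decided by majority vote over its frames. Production systems typically use more robust trackers (SORT- or ByteTrack-style association, for example); the threshold and names here are assumptions.

```python
from collections import Counter
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_fish(frames: List[List[Tuple[Box, str]]], iou_threshold: float = 0.3) -> Counter:
    """Link per-frame detections into tracks and count each track once by majority species."""
    tracks: List[dict] = []  # each track: {"box": last Box, "labels": Counter of species votes}
    active: List[int] = []   # indices of tracks seen in the previous frame
    for detections in frames:
        matched: List[int] = []
        for box, species in detections:
            best: Optional[int] = None
            best_score = iou_threshold
            for t in active:
                if t in matched:
                    continue  # each track takes at most one detection per frame
                score = iou(tracks[t]["box"], box)
                if score >= best_score:
                    best, best_score = t, score
            if best is None:  # no overlapping track: a new fish entered the view
                tracks.append({"box": box, "labels": Counter()})
                best = len(tracks) - 1
            tracks[best]["box"] = box
            tracks[best]["labels"][species] += 1
            matched.append(best)
        active = matched  # a track missing for one frame is closed (no re-identification)
    return Counter(t["labels"].most_common(1)[0][0] for t in tracks)


frames = [
    [((0, 0, 10, 10), "sockeye")],
    [((2, 0, 12, 10), "sockeye"), ((50, 0, 60, 10), "coho")],
    [((4, 0, 14, 10), "coho"), ((52, 0, 62, 10), "coho")],  # first fish misclassified once
]
counts = count_fish(frames)
print(dict(sorted(counts.items())))  # {'coho': 1, 'sockeye': 1}
```

The majority vote is what keeps a single misclassified frame from turning one sockeye into an extra coho; it's a simple illustration of why tracking and counting go together.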

Counting by hand, or reviewing footage or sonar data, takes thousands of person-hours, and the fully compiled data isn't available until after the fishing season is over. The Salmon Vision system can process an hour of video in one minute. Manual counts at traditional trap weirs also slow fish migration: a scientist has to open the weir and count the fish one by one as they move through. "Video weirs tend to reduce migration delays fairly dramatically, when compared to sort of traditional trap-associated weirs," according to Atlas. "We've had 3000 sockeye come through one of our fences in a single day."
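Some quick arithmetic puts those numbers in context. Processing an hour of video in one minute is a 60x speedup. The season length below is a hypothetical example (one camera recording around the clock for 90 days), and the per-minute passage rate simply spreads Atlas's 3,000 sockeye evenly over 24 hours, which real runs won't do.

```python
SPEEDUP = 60  # one hour of video processed in one minute, per the article


def processing_hours(video_hours: float, speedup: float = SPEEDUP) -> float:
    """Compute time needed to process `video_hours` of footage at the given speedup."""
    return video_hours / speedup


def passes_per_minute(fish_per_day: int) -> float:
    """Average passage rate if a day's fish were spread evenly over 24 hours."""
    return fish_per_day / (24 * 60)


season_video_hours = 90 * 24  # hypothetical: one camera recording around the clock for 90 days
print(processing_hours(season_video_hours))  # 36.0
print(round(passes_per_minute(3000), 2))  # 2.08
```

Under that assumption, 2,160 hours of footage becomes about a day and a half of processing, and a 3,000-fish day averages roughly two fish every minute, a pace that would keep a human reviewer constantly busy.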

The team has also been able to reduce costs significantly. Builds that used to cost upward of $50k now run $10k for a video box with an edge processing system, and $5k for a video system alone. Whooshh Innovations in the U.S. and Ocean AID in Canada are Salmon Vision's build and hardware partners.
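The cost figures translate into savings of at least 80% per site, because "upward of $50k" is a lower bound on the old cost. The sketch below also scales the hardware cost to the 100-watershed goal, under the simplifying assumption of one station per watershed. It counts hardware only, not installation, travel, maintenance, or staffing.

```python
OLD_BUILD = 50_000   # "upward of $50k": a lower bound on the old per-site cost
EDGE_BUILD = 10_000  # video box with edge processing
VIDEO_ONLY = 5_000   # video system alone


def percent_savings(old: float, new: float) -> float:
    """Percentage reduction from `old` to `new`."""
    return 100 * (old - new) / old


def fleet_cost(per_site: float, sites: int) -> float:
    """Hardware cost only; ignores installation, travel, maintenance, and staffing."""
    return per_site * sites


print(percent_savings(OLD_BUILD, EDGE_BUILD))  # 80.0
print(percent_savings(OLD_BUILD, VIDEO_ONLY))  # 90.0
print(fleet_cost(OLD_BUILD, 100), fleet_cost(EDGE_BUILD, 100))  # 5000000 1000000
```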

Ideally, these efficiencies free researchers to do more intensive work elsewhere, on tasks that rely on people more than counting fish does. That's to say nothing of the savings on fuel and supplies. "Flying a helicopter or float plane into these sites to perform one count is also incredibly fossil fuel intensive," Atlas says. "And so we have to take a full account...what are the environmental costs of this work? What is the most efficient way we can do that?"

Why bringing AI to fish counts matters

Beyond increased efficiency, two factors make Salmon Vision especially significant. The first is that the system is built on stable, established AI models. An almost incomprehensible amount of money is being thrown at novel AI in the hope that it can subsume human activity in all sorts of areas of life. Set against this cavalcade of buzzword-y innovation (like "agentic AI"), using a CV model to count fish seems quaint. Atlas put it better in the webinar: "Salmon conservation being a relatively small industry has not attracted the type of focus from big tech companies pushing the AI envelope."

The second is a strong sense of co-development with distributed partners and respect for tribal sovereignty. This partnership-based approach feels like a humane way to deploy technology, and it has the potential to put power in the hands of people who aren't at the center of the AI conversation. The labeling web app, for example, was developed alongside the same local partners who will use it to refine system outputs and monitor data collection. "We're also inspired by the opportunity to take a different kind of approach to AI, centering the process of co-development with local and indigenous partners to create a trusted set of tools," Atlas said.

In the webinar, he also stressed how essential salmon returns are to local communities: "There is a real and urgent need to empower local and indigenous communities with in season information on the status of salmon populations returning to their watersheds." He gave the example of Heiltsuk Nation staff, who harvest 500 to 1,000 sockeye annually at their weir and distribute the fish to households, schools, daycares, and hospitals in Bella Bella, British Columbia.

In another instance, federal funding cuts withdrew staffing resources from the Redoubt Lake weir, which supports the largest sockeye subsistence fishery in Southeast Alaska. The Sitka Tribe stepped up and took over leadership of the project.

Seeing (salmon) like a state

Salmon Vision represents a technological achievement with clear conservation benefits. But, as with any quantification of the natural world's "resources," it's worth thinking about unintended consequences. One lens to use is James C. Scott's Seeing Like a State, a CFS favorite. Scott argues that state power often depends on making local systems "legible" (readable and quantifiable) to outside authorities. In his account, this drive for legibility has led to widespread failure across a range of historical attempts to capture and control local processes.

While Indigenous communities manage salmon based on holistic, culturally informed observations, ultra-precise AI counts might shift decision-making power toward external numerical thresholds and away from traditional ecological knowledge. Dr. Atlas notes that salmon are "relatives under the water" to the Sauk-Suiattle people. This sacred relationship doesn't "scale" linearly with numbers, to use the language of technology. A thousand salmon aren't 10x more spiritually significant than 100.

These concerns don't negate Salmon Vision's potential benefits, but they suggest important safeguards. Data sovereignty agreements, for instance, could ensure that communities retain ownership of their data or are guaranteed continued access to broader datasets.

What comes next?

This year's deployments will create new training data, which will help the team reach its benchmark for operational deployment. In their initial paper, the team set that benchmark at mAP scores exceeding 0.90 (90%), which they call "a high but achievable bar for model performance."

The Salmon Vision team is making its technology available for other groups to use, and is forging new partnerships.

Future projects and R&D efforts include training the model to automate counts of outmigrating smolts. Scale aging, which determines an anadromous fish's age by reading the growth rings on its scales, is another area into which Salmon Vision might expand.

The feature backlog and R&D wishlist will be more achievable with additional funding. The team is looking for funders and partnerships to support ongoing development, growth and maintenance. "We're actively fundraising to get resources in place to get this technology to as many watersheds as we can," Atlas says. "If these tools that we're building at the Salmon Vision Collaborative seem like something that could benefit your work, please don't hesitate to reach out."

The next two years will determine whether Salmon Vision can scale from promising pilot to conservation infrastructure. With data coming in and partnerships expanding, the technology seems ready to transform salmon monitoring from a labor-intensive slog to an automated early warning system for some of the Pacific's most critical fisheries.

Contact Atlas and the Wild Salmon Center team through their website.

You can watch the whole webinar introducing Salmon Vision below:

Have you ever worked at a weir counting fish before? What was it like?

Is this a welcome advance, or are we losing some judgement or technique that's essentially human?

Let us know in the comments.

𓆟 𓆝 𓆟
